1. Introduction to SageMaker ML Storage

Proper storage strategies are vital to effective and efficient ML workflows. SageMaker pipelines rely heavily on SageMaker ML storage. Data access speed directly depends upon the storage mechanism used, which affects training. Consequently, any poor storage decisions may result in slow data access and poor reliability. This can significantly slow model training and lead to potential model failures. Many ML workflows consume large datasets, which require not only reliability but also scalable storage solutions.

SageMaker ML storage also needs to manage data versioning to ensure reproducibility. Additionally, it is essential to secure any sensitive data; failure could have potentially serious legal ramifications.

We must consider storage practices not only for training data but also for inference workflows and model storage and versioning. Many applications have data streaming in real time, making sound storage strategies crucial for inference pipelines.

This article covers the main SageMaker storage types, best practices for cost-effective storage, and tips for speeding up your ML workflows.

If you’re starting out with SageMaker, then please check out our foundational guide: SageMaker Overview for ML Engineers.

2. Why Storage Matters in Machine Learning

ML models make inferences from the data that is presented to them, and data is also used for their training. Therefore, they need to have timely and reliable access to both training and inference data. SageMaker ML storage must deliver data fast enough to keep up with ML processing speeds. We must also ensure that these systems are reliable while balancing their costs

Machine learning algorithms like those in SageMaker can fail due to slow data retrieval for several reasons. These include timeouts when the training process waits for data, especially in cloud environments with time and cost constraints. It can also cause data-loading failures in distributed processing where multiple nodes need synchronized access. They are also responsible for memory overload due to inconsistent streaming filling up buffers.

Other poor storage choices include non-optimized storage volumes that increase inference latency and performance degradation. Lack of version control forces data scientists to waste time manually managing datasets. Also, fast access storage is a poor choice for infrequently accessed data, leading to poor cost management.

SageMaker ML storage leverages AWS storage solutions to provide storage commensurate with the full range of SageMaker workloads. These range from training through to inferences.

3. Storage Options in SageMaker

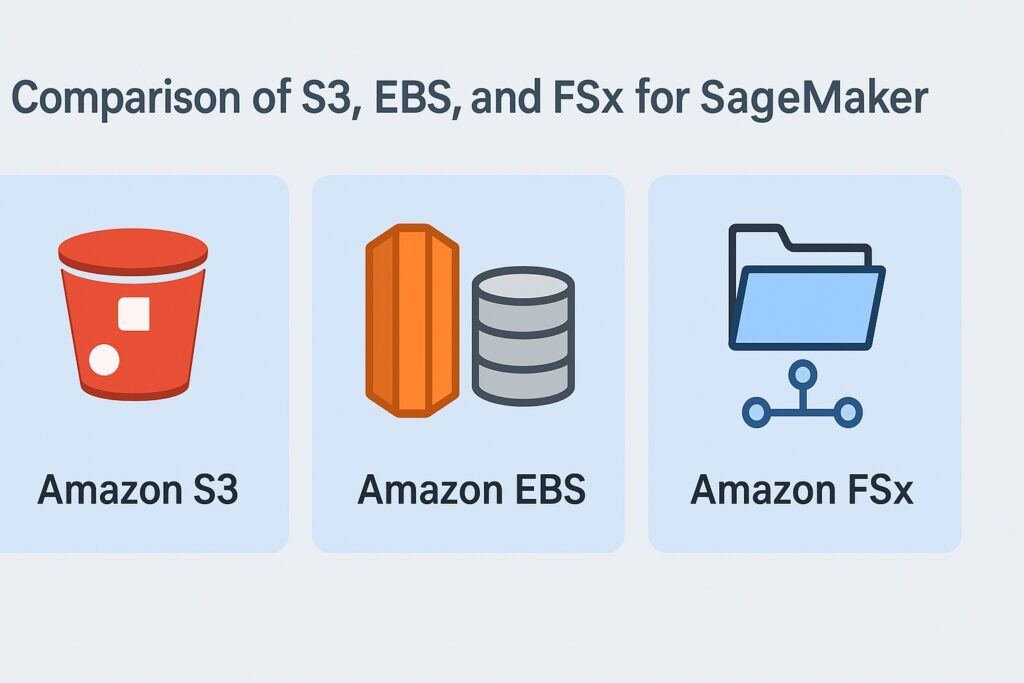

SageMaker ML storage includes several AWS storage services as options based on access speed versus cost. The key advantage for each of these options is that they and SageMaker seamlessly integrate into the AWS infrastructure. This reduces any potential latency while minimizing management and configuration complexity.

3.1 Amazon S3 – The Scalable Core

Amazon S3 is a core SageMaker ML storage component and serves as a highly versatile general-purpose storage service. Therefore, it suits many applications, including SageMaker ML. It provides sufficient read/write access speed that makes it the default storage mechanism for the majority of SageMaker applications. Therefore, we should only consider other services whenever S3 does not match data access speed requirements.

S3 offers several storage classes that trade off data access speed with cost and match different use cases. Its standard storage class is S3 Standard, which has the fastest access speed but is the most expensive. We should use this for frequently accessed ML training data. For storing infrequently accessed long-term data, we should use S3 Glacier. A suitable use case is for training data for deployed models for auditing and troubleshooting.

3.2 Amazon EBS – Fast Volumes for Processing

While S3 offers good value for performance, there are use cases that demand faster access speed from SageMaker ML storage options. There are still many training jobs that require data access speeds that S3 cannot provide, and therefore, S3 is not a suitable choice. Also, training activities with user interactions like SageMaker Notebooks need faster data access than what S3 can deliver.

Amazon Elastic Block Storage (EBS) is a versatile storage option offering different levels of speed versus cost. It provides the data access speed that these applications need but at a suitable price. EBS’s main disadvantage is that you must pay for the storage space even when stored data is a fraction of that. Engineers must carefully plan their storage needs when using EBS.

EBS is applicable inference jobs processing streaming data where low latency is essential. A good example is real-time fraud detection, which continually processes transaction data. Interactive AI-powered applications also require low latency for data access when they must respond instantly to user requests.

3.3 Amazon FSx for Lustre – High-Performance Option

There are many applications that demand high performance from SageMaker ML storage. An important class is when training ML models on massive datasets that require ultra-fast read speeds. This is extended to workloads that need low-latency and high-throughput parallel data access.

Amazon built FSx for Lustre to provide the data access speeds that these applications need. It is more expensive than S3 or EBS, but it provides data access speeds that these services cannot deliver. Engineers must also carefully plan their storage capacity since pricing is based on provisioned storage.

An essential advance for FSx is that it seamlessly integrates with S3, allowing it to hold data when ML uses it for training. Otherwise, it offloads this data back to S3, allowing engineers to only to provision storage that is sufficient for applications. Engineers can optimize costs by intelligently combining S3 with FSx for Lustre.

4. Best Practices for SageMaker ML Storage

This is the core of the article. While the selection of SageMaker ML Storage options is essential, their correct utilization is crucial to ensure ML operates smoothly.

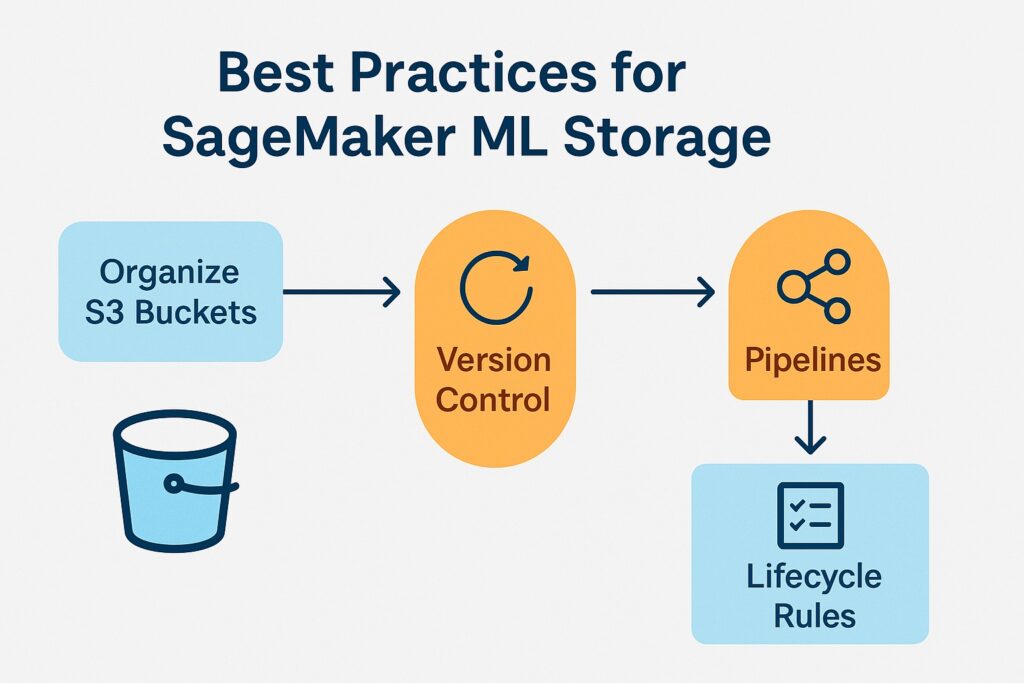

4.1 Organize S3 Buckets Logically

SageMaker ML applications using S3 depend on the correctly organizing stored in S3 buckets. Within the ML domain, there are primarily two data categories: datasets that are primarily textual and artifacts. Artifacts are binary objects like images and video. Therefore we must correctly manage these separately for optimal data access speeds.

A consistent naming convention for S3 objects is crucial for algorithms not to waste processing time guessing which objects to access. Closely associated with this is setting up folder structures so that ML algorithms do not need to search for objects. Searching for objects further wastes processing time.

4.2 Use Version Control and Pipelines

Recently, the ML field has adopted versioning, overcoming many issues around maintaining ML models similar to software maintenance. Whereas software faces changing requirements, ML models face changing environments, shifting from those they were initially trained with. Also, both software and ML models need versioning for troubleshooting in replicating the conditions causing defects.

SageMaker Pipelines adopts the same principles as software CI/CD, automating the entire ML model lifecycle. Hence, applying versioning to stored datasets is crucial, and engineers must adopt a versioning strategy along with the storage options they select. Even when they do not use S3 for training, they should use it for archiving and version-archived datasets and models. Engineers can also leverage object versioning provided by S3.

4.3 Optimize for Cost and Performance

There are many opportunities for engineers to optimize the cost and performance of SageMaker ML storage. When they need to use Lustre for Fx for high-performance applications, then they can leverage its seamless integration with S3. This tag teams S3 and Lustre so that they can minimize the provisioned store for Lustre and minimize costs. They achieve this while still delivering the needed data access performance.

ML model lifecycle management has evolved, and dataset lifecycle management is an important component of this. Therefore, engineers must establish lifecycle rules for both the ML model and their training datasets and leverage S3 lifecycle management. S3 provided intelligent tiering, removing the need to manage object lifecycles manually and further automating SageMaker Pipelines.

5. Speeding Up ML Workflows with SageMaker ML Storage

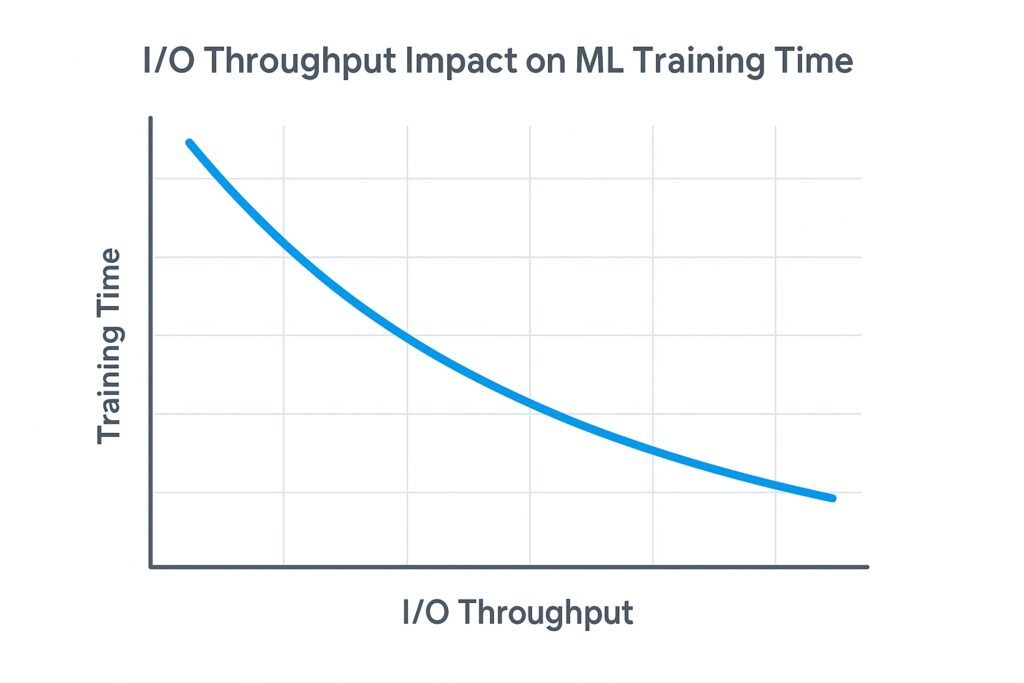

The key consideration for SageMaker ML storage is I/O throughput because it directly affects training speed. Whenever there is low I/O throughput, then training jobs have to wait for data, which slows down progress. High data input speed is necessary to maximize the utilization of high-performance CPUs and GPUs, which requires high-throughput storage. Whereas insufficient data throughput levels lead to bottlenecks, increasing total training time and cloud costs.

We have seen that selecting the SageMaker ML storage option with the right data throughput is crucial. However, there are strategies that also improve data throughput on top of the right storage option. Prefetching is a simple strategy that loads data in advance and reduces the wait times during training. Sharding also improves throughput by splitting data across multiple sources and enabling parallel access during training.

FSx for Lustre provides high performance for ML training and inference that S3 or EBS cannot provide. Training jobs that involve deep learning models on large image datasets requiring high I/O throughput are one example. Another is ML models that accelerate genomics research to process massive datasets quickly. Financial risk models that rely on high-frequency data need a reduced turnaround time that FSx for Lustre can provide.

6. Data Security and Compliance for SageMaker ML Storage

Data throughput is central to SageMaker ML storage, but other aspects are equally important, including data security and regulatory compliance.

It is good practice to configure encryption for data at rest for all storage services unless we can obtain a special exemption. All the AWS storage services we have explored support built-in encryption, and all these have a minimal effect on latency. They all integrate with AWS KMS to protect data at rest. EBS also allows customer-managed KMS keys, while FSx for Lustre also supports data in transit.

Another key practice is access control to the stored data. These storage services are integrated with AWS IAM roles to provide authentication and authorization.

Security best practices include logging, especially for any forensic or auditing activities. Integration with AWS CloudWatch provides this ability.

It is essential to follow these best practices in protecting sensitive data. This is especially true in a strict regulatory environment around healthcare and finance.

7. Common Pitfalls to Avoid with SageMaker ML Storage

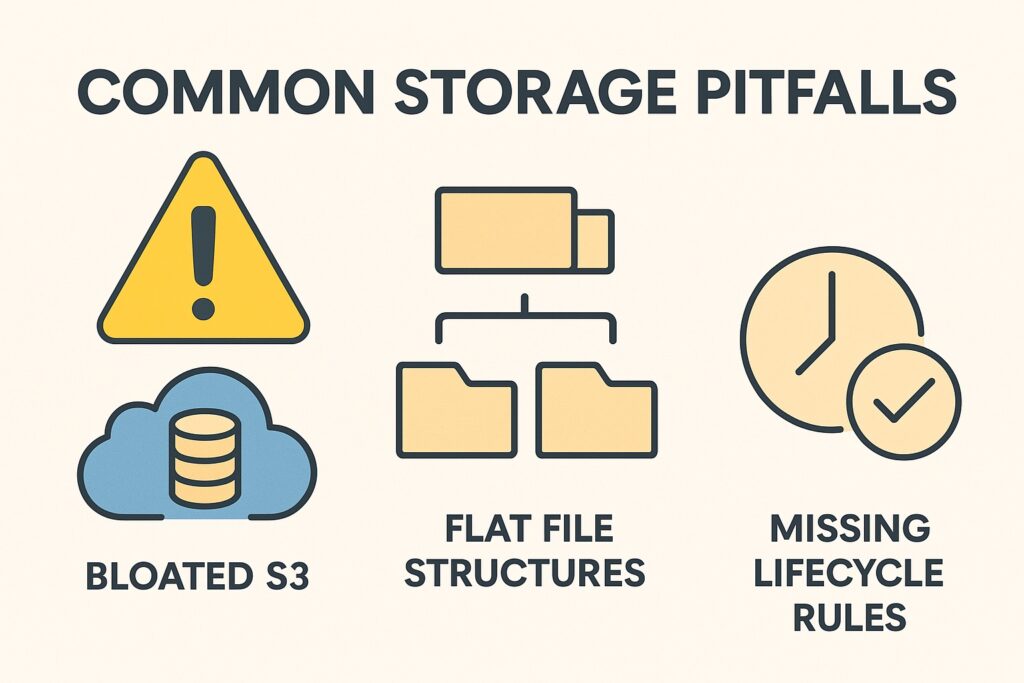

Along with best practices, there are common pitfalls with SageMaker ML storage that we should avoid. S3 has several storage categories and storing everything in S3 Standard is a storage smell and adds unnecessary costs. We discussed designing folder structures earlier, and having flat structures without prefixes will slow down data retrieval. Also, failing to implement lifecycle rules will lead to bloated, unorganized buckets, adding unnecessary costs and slowing data retrieval. Associated with this is the failure to separate datasets from artifacts that will also cause access delays.

While we should preserve earlier datasets, keeping outdated datasets in storage classes other than the cheapest Glacier option is wasteful.

We have already discussed that we match the storage option with the data access speed that the SageMaker application requires. Using a storage option that has an access speed greater than what is needed is overpaying for storage. This is a poor practice and is also wasteful.

Many applications will experience growth, and sound engineering includes planning for scale and changing access patterns. Failure to plan will result in performance degradation and cost overruns.

8. Final Thoughts and Action Steps

SageMaker ML storage is a crucial element of the SageMaker ML workflow in terms of facilitating data throughput. There are several options available that provide different throughput versus cost tradeoffs. ML engineers should make intelligent choices based on data throughput needs and planning for scaling and access pattern changes. They must also implement the right storage strategy and not squander the benefits of the chosen storage option.

ML model maintenance also involves changing storage needs over the ML model’s lifecycle. Lifecycle best practices include regular audits of the current SageMaker storage setup and whether it still matches storage needs.

Below are references and further reading for you to continue on your SageMaker ML storage journey.

9. Further Reading

Continue sharpening your knowledge of SageMaker, ML workflows, and cloud architecture with these recommended reads:

- Designing Data-Intensive Applications – A must-read on storage systems, scalability, and throughput for ML engineers working with big data.

- AWS Certified Machine Learning Specialty Exam Guide – Useful for readers pursuing the AWS ML Specialty certification, with detailed content on SageMaker storage strategies.

- Cloud Architecture Patterns – Offers insights into scalable design and storage choices in cloud-native architectures, including ML use cases.

🔗 Affiliate Disclaimer

As an Amazon Associate, I earn from qualifying purchases. This helps support the site at no additional cost to you.

10. References

- Amazon SageMaker Documentation

https://docs.aws.amazon.com/sagemaker/latest/dg/whatis.html - Amazon S3 Storage Classes

https://docs.aws.amazon.com/AmazonS3/latest/userguide/storage-class-intro.html - Amazon EBS Features

https://docs.aws.amazon.com/ebs/latest/userguide/what-is-ebs.html - Amazon FSx for Lustre Overview

https://docs.aws.amazon.com/fsx/latest/LustreGuide/what-is.html - Amazon S3 Lifecycle Configuration

https://docs.aws.amazon.com/AmazonS3/latest/userguide/lifecycle-configuration-examples.html - AWS KMS (Key Management Service)

https://docs.aws.amazon.com/kms/latest/developerguide/overview.html - AWS IAM Access Control for Amazon S3

https://docs.aws.amazon.com/AmazonS3/latest/userguide/s3-access-control.html - AWS CloudWatch Logs

https://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/WhatIsCloudWatchLogs.html - Best Practices for Amazon SageMaker Pipelines

https://docs.aws.amazon.com/sagemaker/latest/dg/pipelines-best-practices.html